n8n Kubernetes Deployment: How to Run n8n at Scale with High Availability

As automation becomes a core part of business operations, more teams are turning to n8n—an open-source workflow automation tool—to streamline repetitive tasks, integrate services, and orchestrate data flows. However, running n8n at scale requires more than just spinning up a container. That’s where n8n Kubernetes deployment comes in.

In this guide, we’ll explore how to deploy n8n on Kubernetes clusters for production-ready automation infrastructure, covering architectural considerations, step-by-step setup, best practices, and how Groove Technology helps organizations scale securely.

Why Deploy n8n on Kubernetes?

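Kubernetes offers a robust platform for managing automation workloads at scale. When deploying n8n, Kubernetes lets organizations scale worker pods automatically in response to demand, based on CPU usage or Redis queue length. CPU-based scaling is available through the built-in Horizontal Pod Autoscaler, while scaling on queue length requires exposing the queue depth as a custom or external metric, for example through KEDA or a Prometheus adapter. This horizontal scaling helps maintain workflow execution speed and prevent delays during peak usage.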

High availability is another core benefit. Kubernetes provides redundancy by running multiple replicas of each pod and by using anti-affinity rules to distribute those replicas across different nodes. This approach reduces the risk of total service interruption due to node failure and, combined with rolling updates, supports zero-downtime upgrades.
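
As a rough illustration of the anti-affinity idea, the sketch below builds the `affinity` stanza of a pod spec as a Python dictionary and prints it as JSON. The `app: n8n-worker` label and the helper name `spread_across_nodes` are assumptions made for this example, not names taken from n8n or from any particular Helm chart.

```python
import json


def spread_across_nodes(app_label: str) -> dict:
    """Build an affinity stanza that asks the scheduler to avoid placing
    two pods with the same `app` label on the same node."""
    return {
        "podAntiAffinity": {
            # "preferred" is a soft rule: pods still schedule when the
            # cluster has fewer nodes than replicas.
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {
                    "weight": 100,
                    "podAffinityTerm": {
                        "labelSelector": {"matchLabels": {"app": app_label}},
                        # One "topology domain" per node hostname.
                        "topologyKey": "kubernetes.io/hostname",
                    },
                }
            ]
        }
    }


# Hypothetical label for the worker pods.
affinity = spread_across_nodes("n8n-worker")
print(json.dumps(affinity, indent=2))
```

The resulting dictionary would sit under `spec.template.spec.affinity` of a Deployment. A soft preference is used here so that small clusters can still schedule every replica; a hard `requiredDuringSchedulingIgnoredDuringExecution` rule is the stricter alternative.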

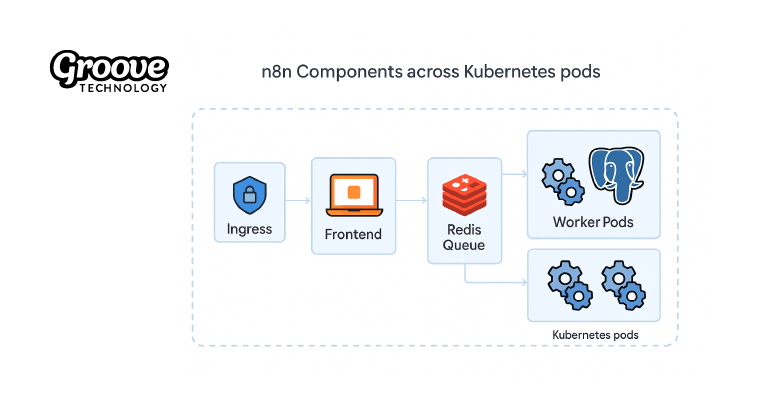

Additionally, Kubernetes enables process isolation by separating core n8n components—like API handling, webhook listening, and worker execution—into distinct pods or services. This architectural separation boosts performance and security.

From a DevOps perspective, Kubernetes integrates seamlessly with tools like Helm, GitOps platforms, and CI/CD pipelines, allowing teams to manage infrastructure as code. This results in faster deployments, easier rollbacks, and consistent environments across development and production.

Finally, with built-in support for monitoring and observability, Kubernetes lets you track application health, latency, and failure rates using Prometheus and visualize system behavior in Grafana. This visibility is critical for maintaining automation platform performance over time.

Real Use Cases of n8n on Kubernetes

To better understand the practical application of n8n in Kubernetes environments, let’s explore two real-world use cases that demonstrate how modular Kubernetes architecture helps scale workflow automation.

Use Case 1: High-Traffic Webhook Handling for an E-Commerce Platform

A client in the retail space needed to handle high volumes of order-related webhooks from Shopify and sync them with a custom ERP system. The architecture was designed to isolate webhook intake from workflow execution. The frontend pod handled all incoming webhooks exposed via Ingress with SSL termination, ensuring fast, secure delivery. Redis acted as the buffering layer, queuing webhook payloads. Multiple worker pods were deployed with Horizontal Pod Autoscaling to process workflows in parallel. PostgreSQL stored customer workflows, logging each successful sync or failure for audit purposes. With this setup, the platform achieved 99.99% availability even during sales campaigns.

Use Case 2: Internal Automation for a SaaS Product Team

An enterprise SaaS company used n8n to automate internal operations like daily Slack digests, deployment status reports, and integration between Jira and Notion. Running on Azure Kubernetes Service (AKS), n8n was deployed with customized Helm charts. The team separated responsibilities across pods: one for manual triggers via UI, another for scheduled tasks. Secrets were securely managed with Azure Key Vault injected into Kubernetes Secrets. Logging and metrics collection used Prometheus and Grafana, offering visibility into slow-running jobs and error patterns. With this setup, the team could safely iterate on workflows while maintaining stability across production and staging.

These use cases show how Kubernetes enables scalable, secure, and maintainable n8n deployments by structuring responsibilities across pods and integrating with robust tooling.

Step-by-Step: How to Deploy n8n on Kubernetes

To begin deploying n8n on Kubernetes, start by preparing a suitable cluster. Managed Kubernetes services such as AWS EKS, Azure AKS, and Google GKE offer easy provisioning, built-in monitoring, and native integration with cloud services. Alternatively, you can self-host with tools like kubeadm or k3s for lightweight or on-premise setups. Ensure your cluster includes at least three worker nodes, each with a minimum of 2 vCPUs and 4GB of RAM, along with an Ingress controller and cert-manager for routing and HTTPS support.

Next, provision your PostgreSQL database and Redis message queue. These services can be deployed using Helm charts, custom manifests, or as managed cloud services. Store their access credentials securely using Kubernetes Secrets. Once provisioned, configure environment variables for n8n to connect to these services.
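
One way to picture this step is the sketch below, which builds a Kubernetes Secret and the matching n8n environment variables as Python dictionaries. The Secret name `n8n-backends`, the `n8n` namespace, the Service hostnames, and the placeholder passwords are all assumptions for illustration; the `DB_*` and `QUEUE_BULL_REDIS_*` variable names are the ones n8n reads for its PostgreSQL and Redis connections.

```python
import base64
import json


def make_secret(name: str, namespace: str, values: dict) -> dict:
    """Build an Opaque Secret; the API expects base64-encoded values in `data`."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {k: base64.b64encode(v.encode()).decode() for k, v in values.items()},
    }


def secret_env(var: str, secret_name: str, key: str) -> dict:
    """Environment variable whose value is read from a Secret key at pod start."""
    return {"name": var, "valueFrom": {"secretKeyRef": {"name": secret_name, "key": key}}}


# Placeholder credentials; real values should come from a secure source.
secret = make_secret(
    "n8n-backends",
    "n8n",
    {"postgres-password": "change-me", "redis-password": "change-me"},
)

# Hostnames below assume Services named `postgres` and `redis` in the `n8n` namespace.
n8n_env = [
    {"name": "DB_TYPE", "value": "postgresdb"},
    {"name": "DB_POSTGRESDB_HOST", "value": "postgres.n8n.svc.cluster.local"},
    {"name": "DB_POSTGRESDB_PORT", "value": "5432"},
    {"name": "DB_POSTGRESDB_DATABASE", "value": "n8n"},
    {"name": "DB_POSTGRESDB_USER", "value": "n8n"},
    secret_env("DB_POSTGRESDB_PASSWORD", "n8n-backends", "postgres-password"),
    {"name": "QUEUE_BULL_REDIS_HOST", "value": "redis.n8n.svc.cluster.local"},
    {"name": "QUEUE_BULL_REDIS_PORT", "value": "6379"},
    secret_env("QUEUE_BULL_REDIS_PASSWORD", "n8n-backends", "redis-password"),
]

print(json.dumps(secret, indent=2))
print(json.dumps(n8n_env, indent=2))
```

The `n8n_env` list is meant to be placed under a container's `env` field, so passwords never appear in the Deployment manifest itself.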

You can then deploy n8n itself using Helm charts or raw YAML manifests. Define Deployments or StatefulSets for the frontend, specify ConfigMaps for non-sensitive environment variables, and use PersistentVolumeClaims if local storage is required. If deploying with Helm, customize values for scaling, secrets, and domain routing within a values.yaml file.
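
For the raw-manifest route, a minimal sketch of a ConfigMap plus a frontend Deployment might look like the following. The resource names (`n8n-config`, `n8n-frontend`), the `n8n` namespace, the domain `n8n.example.com`, and the resource figures are assumptions for this example; the image is left untagged here, and a specific version would normally be pinned.

```python
import json

NAMESPACE = "n8n"  # assumed namespace
LABELS = {"app": "n8n-frontend"}  # assumed label

config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "n8n-config", "namespace": NAMESPACE},
    # Non-sensitive settings only; credentials belong in Secrets.
    "data": {
        "N8N_HOST": "n8n.example.com",
        "N8N_PORT": "5678",
        "WEBHOOK_URL": "https://n8n.example.com/",
    },
}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "n8n-frontend", "namespace": NAMESPACE},
    "spec": {
        "replicas": 1,
        "selector": {"matchLabels": LABELS},
        "template": {
            "metadata": {"labels": LABELS},
            "spec": {
                "containers": [
                    {
                        "name": "n8n",
                        "image": "n8nio/n8n",  # pin a tag in practice
                        "ports": [{"containerPort": 5678}],
                        # Load every ConfigMap key as an environment variable.
                        "envFrom": [{"configMapRef": {"name": "n8n-config"}}],
                        "resources": {
                            "requests": {"cpu": "250m", "memory": "512Mi"},
                            "limits": {"memory": "1Gi"},
                        },
                    }
                ]
            },
        },
    },
}

# Wrap both objects in a List so they can be applied together.
bundle = {"apiVersion": "v1", "kind": "List", "items": [config_map, deployment]}
print(json.dumps(bundle, indent=2))
```

Since `kubectl` accepts JSON as well as YAML, the printed output could be piped to `kubectl apply -f -` in a test cluster.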

To expose n8n externally, define Ingress resources that map your domain to the frontend service. Cert-manager can be configured with Let’s Encrypt to automatically issue and renew TLS certificates, enabling HTTPS for secure communication.
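
The routing described above could be sketched as an Ingress object like the one below. The host `n8n.example.com`, the Service name `n8n-frontend`, the `nginx` ingress class, and the ClusterIssuer name `letsencrypt-prod` are assumptions; the `cert-manager.io/cluster-issuer` annotation is what asks cert-manager to issue a certificate for the hosts listed under `tls`.

```python
import json


def n8n_ingress(host: str, service: str, issuer: str) -> dict:
    """Build an Ingress that routes `host` to `service` and requests a
    TLS certificate from the named cert-manager ClusterIssuer."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": "n8n",
            "namespace": "n8n",  # assumed namespace
            "annotations": {"cert-manager.io/cluster-issuer": issuer},
        },
        "spec": {
            "ingressClassName": "nginx",  # assumes an NGINX ingress controller
            "tls": [{"hosts": [host], "secretName": "n8n-tls"}],
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {"name": service, "port": {"number": 5678}}
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


ingress = n8n_ingress("n8n.example.com", "n8n-frontend", "letsencrypt-prod")
print(json.dumps(ingress, indent=2))
```

cert-manager stores the issued certificate in the Secret named by `secretName` and renews it before expiry, so the Ingress itself does not need to change over time.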

Finally, enable queue mode by setting the EXECUTIONS_MODE=queue environment variable and supplying Redis credentials. In this setup, the frontend handles triggers and passes execution jobs into the Redis queue, where multiple worker pods process them asynchronously. This ensures better concurrency and load distribution.
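
A worker Deployment for queue mode might be sketched as follows. The Redis hostname, the `n8n` namespace, and the Secret `n8n-env` (assumed to hold the database credentials and the shared encryption key as environment-variable-named keys) are assumptions for this example. Passing `worker` as the container argument starts the n8n worker process instead of the main server.

```python
import json

NAMESPACE = "n8n"  # assumed namespace
REDIS_HOST = "redis.n8n.svc.cluster.local"  # assumed Service DNS name


def worker_deployment(replicas: int) -> dict:
    """Build a Deployment whose pods run `n8n worker` against the Redis queue."""
    labels = {"app": "n8n-worker"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "n8n-worker", "namespace": NAMESPACE},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "n8n-worker",
                            "image": "n8nio/n8n",  # pin a tag in practice
                            "args": ["worker"],
                            "env": [
                                {"name": "EXECUTIONS_MODE", "value": "queue"},
                                {"name": "QUEUE_BULL_REDIS_HOST", "value": REDIS_HOST},
                                {"name": "QUEUE_BULL_REDIS_PORT", "value": "6379"},
                            ],
                            # Hypothetical Secret with DB credentials and the
                            # encryption key shared with the frontend.
                            "envFrom": [{"secretRef": {"name": "n8n-env"}}],
                        }
                    ]
                },
            },
        },
    }


workers = worker_deployment(replicas=3)
print(json.dumps(workers, indent=2))
```

The frontend pod needs the same `EXECUTIONS_MODE` and Redis settings, and workers must share the frontend's database and encryption key so they can load workflows and decrypt credentials.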


Best Practices for n8n Kubernetes Deployments

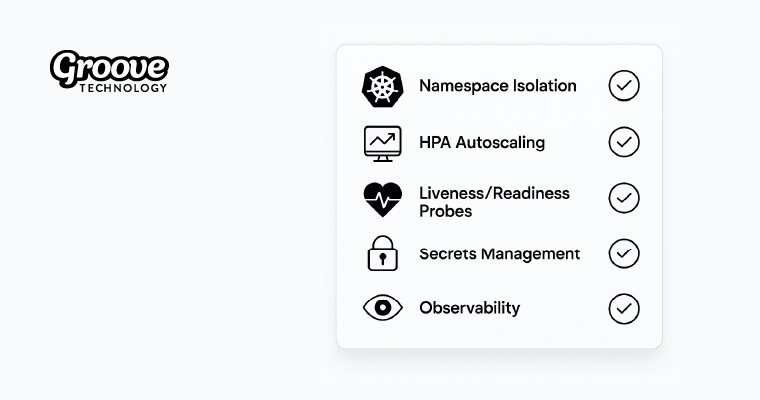

To ensure a stable, secure, and scalable n8n Kubernetes deployment, it’s essential to isolate your services within a dedicated namespace. Namespace isolation helps maintain better RBAC policies, reduce accidental cross-environment interactions, and facilitate cleaner management of deployment-specific resources.

One of the most powerful features Kubernetes provides is the ability to autoscale pods. For n8n, you can configure a Horizontal Pod Autoscaler (HPA) to increase or decrease the number of worker pods based on metrics such as CPU usage or Redis queue length. CPU utilization works out of the box once the metrics server is installed and the worker containers declare CPU requests; queue length has to be supplied to the HPA as a custom or external metric. This allows your automation system to handle usage spikes without overprovisioning infrastructure during idle periods.
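
A CPU-based autoscaler for the workers could be sketched like this. The target Deployment name `n8n-worker`, the replica bounds, and the 70% utilization target are assumptions chosen for illustration rather than recommended values.

```python
import json


def worker_hpa(min_replicas: int, max_replicas: int, cpu_percent: int) -> dict:
    """Build an autoscaling/v2 HPA that scales the worker Deployment on
    average CPU utilization (relative to each container's CPU request)."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": "n8n-worker", "namespace": "n8n"},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": "n8n-worker",  # assumed Deployment name
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": cpu_percent,
                        },
                    },
                }
            ],
        },
    }


hpa = worker_hpa(min_replicas=2, max_replicas=10, cpu_percent=70)
print(json.dumps(hpa, indent=2))
```

Scaling on Redis queue length would replace the `Resource` metric with an `External` or `Pods` metric, which in turn depends on a metrics adapter being installed in the cluster.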

It’s equally important to use liveness and readiness probes. These probes help Kubernetes identify when a pod is unhealthy or not ready to receive traffic. For n8n, a liveness probe can monitor the /healthz endpoint, restarting the container if it becomes unresponsive, while readiness probes ensure that traffic is only routed to healthy pods during rolling updates or bootstrapping.
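
The probe configuration described above might be sketched as follows. The timing values are assumptions to be tuned per environment, and using `/healthz` for the readiness probe as well is a simplification made for this example; port 5678 is n8n's default HTTP port.

```python
import json


def http_probe(path: str, port: int, *, initial_delay: int, period: int) -> dict:
    """Build an HTTP GET probe; a non-2xx/3xx response counts as a failure."""
    return {
        "httpGet": {"path": path, "port": port},
        "initialDelaySeconds": initial_delay,
        "periodSeconds": period,
        "timeoutSeconds": 5,
        "failureThreshold": 3,
    }


container_probes = {
    # Restart the container after repeated failures.
    "livenessProbe": http_probe("/healthz", 5678, initial_delay=30, period=10),
    # Remove the pod from Service endpoints while it is not ready.
    "readinessProbe": http_probe("/healthz", 5678, initial_delay=5, period=5),
}

print(json.dumps(container_probes, indent=2))
```

Both keys belong inside the container entry of the pod spec. A longer initial delay on the liveness probe gives n8n time to run database migrations on first start before restarts are triggered.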

Managing sensitive credentials securely is non-negotiable in a production setup. Externalizing secrets—by storing environment variables in Kubernetes Secrets or tools like HashiCorp Vault—keeps them separate from code and minimizes exposure risk. This also aligns with best practices in compliance-focused industries.

Observability is another cornerstone of enterprise-grade deployments. Set up Prometheus to collect performance metrics such as workflow execution time, queue depth, and memory usage. Visualize this data in Grafana to monitor health trends, and use Fluent Bit or Loki to ship logs for centralized error tracing and debugging.

Finally, ensure your PostgreSQL database is backed up regularly. You can use Kubernetes-native tools like Velero or Stash to snapshot persistent volumes, or configure cron-based jobs that use pg_dump to export the database content to a secure backup store. This ensures business continuity in case of accidental deletions, failed upgrades, or cluster failures.
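
The cron-based `pg_dump` option could be sketched as a CronJob like the one below. The `postgres:16` image (its `pg_dump` must be at least as new as the server), the database host and user, the Secret `n8n-backends`, and the PersistentVolumeClaim `n8n-backups` are all assumptions for illustration.

```python
import json


def pg_dump_cronjob(schedule: str) -> dict:
    """Build a CronJob that writes a dated custom-format dump to a mounted volume."""
    # pg_dump reads connection settings from the PG* environment variables.
    dump_cmd = 'pg_dump --format=custom --file="/backup/n8n-$(date +%F).dump"'
    container = {
        "name": "pg-dump",
        "image": "postgres:16",  # assumed version
        "command": ["sh", "-c", dump_cmd],
        "env": [
            {"name": "PGHOST", "value": "postgres.n8n.svc.cluster.local"},
            {"name": "PGDATABASE", "value": "n8n"},
            {"name": "PGUSER", "value": "n8n"},
            {
                "name": "PGPASSWORD",
                "valueFrom": {
                    "secretKeyRef": {"name": "n8n-backends", "key": "postgres-password"}
                },
            },
        ],
        "volumeMounts": [{"name": "backup", "mountPath": "/backup"}],
    }
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": "n8n-pg-backup", "namespace": "n8n"},
        "spec": {
            "schedule": schedule,
            "concurrencyPolicy": "Forbid",  # skip a run if the previous one is still going
            "jobTemplate": {
                "spec": {
                    "template": {
                        "spec": {
                            "restartPolicy": "OnFailure",
                            "containers": [container],
                            "volumes": [
                                {
                                    "name": "backup",
                                    "persistentVolumeClaim": {"claimName": "n8n-backups"},
                                }
                            ],
                        }
                    }
                }
            },
        },
    }


backup_job = pg_dump_cronjob("0 2 * * *")  # every day at 02:00
print(json.dumps(backup_job, indent=2))
```

A dump stored on a volume inside the same cluster does not protect against cluster loss, so in practice the file would also be copied to external object storage, and restores should be tested periodically with `pg_restore`.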

Groove Technology’s Expertise in n8n Kubernetes Deployments

Groove Technology brings over 10 years of software outsourcing development experience, working with clients across Australia, Europe, the UK, and the US. Our developers are fully equipped to help you deploy and scale automation platforms like n8n using Kubernetes. In addition to deep Kubernetes expertise, our team has strong technical capabilities in full-stack development, including Python, Node.js, React Native, and the Microsoft technology stack.

Our services include:

- Kubernetes-native architecture planning

- Helm-based or custom manifest deployments

- Redis and PostgreSQL provisioning and hardening

- Ingress, TLS, and DNS configuration for secure web access

- CI/CD integration using GitHub Actions, GitLab, or ArgoCD

- Monitoring stack setup with Grafana, Prometheus, and alerting tools

- Integration of custom APIs and backend logic using modern tech stacks like Python, Node.js, and .NET

Whether you’re starting small or running thousands of workflows per hour, Groove Technology helps you scale with confidence.

Final Thoughts

Kubernetes gives n8n the power to grow from a single-node automation tool into a distributed, enterprise-grade platform. With proper architecture, configuration, and DevOps practices, you can ensure high availability, security, and performance.

Need help deploying n8n on Kubernetes? Contact Groove Technology and let’s build your automation infrastructure together.